Photo giant Getty took a leading AI image-maker to court. Now it’s also embracing the technology

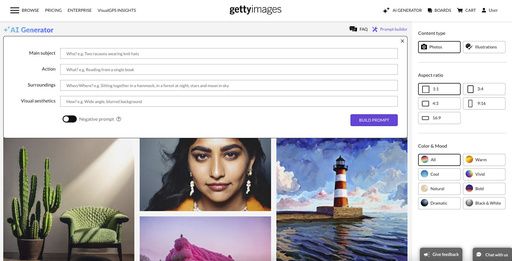

This photo provided by Getty Images shows an example of the company’s artificial intelligence image-generator. The Seattle-based stock photo company is taking a two-pronged approach to the threat and opportunity that AI poses to its business. On Monday, Sept. 25, 2023, it joined the small but growing market of AI image makers with a new service, trained on Getty’s vast library of human-made photos, that enables its customers to create novel images. (Getty Images via AP)

Anyone looking for a beautiful photograph of a desert landscape can find many choices from Getty Images, the stock photography collection.

But say you’re instead looking for a wide angle shot of a “hot pink plastic saguaro cactus with large arms that stick out, surrounded by sand, in landscape at dawn.” Getty Images says you can now ask its artificial intelligence image-generator to make one on the spot.

The Seattle-based company is taking a two-pronged approach to the threat and opportunity that AI poses to its business. First, it sued a leading purveyor of AI-generated images earlier this year for what it alleged was “brazen infringement” of Getty’s image collection “on a staggering scale.”

But on Monday, it also joined the small but growing market of AI image makers with a new service, trained on Getty’s own vast library of human-made photos, that enables its customers to create novel images.

The difference, said Getty Images CEO Craig Peters, is that this new service is “commercially viable” for business clients and “wasn’t trained on the open internet with stolen imagery.”

He contrasted that with some of the first movers in AI-generated imagery, such as OpenAI’s DALL-E, Midjourney and Stability AI, maker of Stable Diffusion.

“We have issues with those services, how they were built, what they were built upon, how they respect creator rights or not, and how they actually feed into deepfakes and other things like that,” Peters said in an interview.

In a lawsuit filed early this year in a Delaware federal court, Getty alleged that London-based Stability AI had copied without permission more than 12 million photographs from its collection, along with captions and metadata, “as part of its efforts to build a competing business.”

Getty said in the lawsuit that it’s entitled to damages of up to $150,000 for each infringed work, an amount that, multiplied across the more than 12 million photographs at issue, could theoretically add up to $1.8 trillion. Stability is seeking to dismiss or move the case but hasn’t formally responded to the underlying allegations. A court battle is still brewing, as is a parallel one in the United Kingdom.

Peters said the new service, called Generative AI by Getty Images, emerged from a longstanding collaboration with California tech company and chipmaker Nvidia that preceded the legal challenges against Stability AI. It’s built upon Edify, an AI model from Nvidia’s generative AI division Picasso.

It promises “full indemnification for commercial use” and is meant to avoid the intellectual property risks that have made businesses wary of using generative AI tools.

Getty contributors will also be paid for having their images included in the training set, incorporated as part of royalty obligations so that the company is “actually sharing the revenue with them over time rather than paying a one-time fee or not paying that at all,” Peters said.

Expected users are brands looking for marketing materials or other creative imagery, where Getty competes with rivals such as Shutterstock, which has partnered with OpenAI’s DALL-E, and software company Adobe, which has built its own AI image-generator Firefly. It’s not expected to appeal to those looking for photojournalism or editorial content, where Getty competes with news organizations including The Associated Press.

Peters said the new model doesn’t have the capacity to produce politically harmful “deepfake” images because it automatically blocks requests to depict recognizable people or brands. As an example, he typed the prompt “President Joe Biden on surfboard” in a demonstration to an AP reporter and the tool refused the request.

“The good news about this generative engine is it cannot produce the Pentagon getting bombed. It cannot produce the pope wearing Balenciaga,” he said, referencing a widely shared AI-generated fake image of Pope Francis dressed in a stylish puffer jacket.

AI-generated content also won’t be added to Getty Images content libraries, which will be reserved for “real people doing real things in real places,” Peters said.